Below is some basic training to help journalists identify potentially bad research being presented by policy groups.

DATA QUALITY and METHODOLOGY:

These are the two key factors in good research. If either one is insufficient, then so are the results of the study.

DATA QUALITY: There are “gold standard” data sources such as the CDC mortality databases, the FBI’s crime reporting system, the Census Bureau for anything related to demographics (state, county or city), the UN Drug and Crime datasets, and the Small Arms Survey. We also favor the Violence Project’s mass public shooting database, as they have been given unprecedented access to perpetrators’ backgrounds (such as their mental health and treatment status).

! When presented with research, ask what raw data sources were used. If they are not gold standard, if they were created by a policy group, or if the sources have no significant use elsewhere, then the study is suspect.

Some researchers do primary research and develop new, quality information. For example, in 2022, Professor William English of Georgetown University conducted a large-scale survey to determine, among other things, state-level gun ownership rates. Before then, most estimates of gun ownership rates were derived from proxies.

! When presented with new primary data, ask for the data gathering methodologies and the sample size. Small samples carry wide margins of error, and biased sampling (such as surveying only NRA members, only Republicans, or only high income earners) would pollute the results.
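
To make the sample size question concrete, here is a minimal sketch of the standard margin-of-error approximation for a surveyed percentage. It assumes a simple random sample; the function name, the 95% confidence level (z = 1.96), and the worst-case proportion of 0.5 are illustrative assumptions, not figures from any study discussed here.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    z = 1.96 corresponds to a 95% confidence level, and proportion = 0.5 is
    the worst case (widest margin). Both defaults are illustrative assumptions.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must be between 0 and 1")
    return z * math.sqrt(proportion * (1.0 - proportion) / sample_size)


if __name__ == "__main__":
    # Hypothetical sample sizes, chosen only to show how the margin shrinks.
    for n in (100, 1_000, 10_000):
        points = margin_of_error(n) * 100
        print(f"n={n}: +/- {points:.1f} percentage points")
```

Note that this only measures random sampling error. A large sample drawn from a skewed group (the NRA-members-only case above) can have a tiny margin of error and still be wrong.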

One recurring problem is state-level “gun law scorecards” published by advocacy groups. These scorecards have no basis in statistical criminology, and are often just policy group wish lists. Using them as a metric is pointless as there is nothing underpinning the scores and grades. For example, one policy group gives California an “A” for its gun laws, but gives Kansas an “F,” though both states have nearly identical gun homicide rates.

METHODOLOGIES: There are appropriate and inappropriate methodologies. In recent years, the number of studies using inappropriate methodologies has gone up.

Because methodologies are complicated, because their descriptions get buried in studies, and because some statistical mathematics are complex, it is easy to get misled.

In recent times, the following inappropriate methodologies have been recurring:

Synthetic modeling: These methodologies create crime statistics that never existed by creating mathematical models based on (often dubious) assumptions and data sources. Modeling is appropriate when data is scarce, but in the United States we have robust crime data reporting. Hence, this approach is not appropriate.

Quasi-experimental: This methodology resembles an experiment, but unlike a true experiment, a quasi-experiment does not rely on random data assignment (which is what you would obtain from raw crime data). These have roles in fields where the nature of relationships has to be inferred because it cannot be measured directly, which is not the case in most gun violence data.


Incomplete variable testing: There are many variables that can be tested against crime and laws. The most frequent arguments among academics concern which variables should be tested. Not testing obvious variables is now a common flaw.

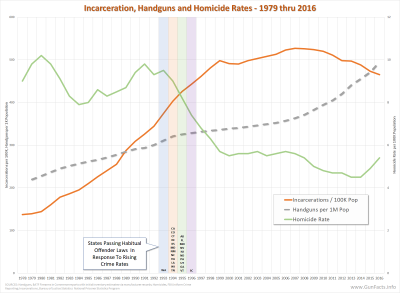

For example, a 2023 study looking just at gun control laws concluded that the volume of strict gun control laws caused homicides to fall after 1993. The authors did not account for the fact that 24 states, including populous California, passed habitual offender laws (e.g., three strikes) that took many bad actors off the streets and prevented them from committing further violent crime.

Gun-only focus: Suffocation suicide rates are rising faster than gun suicide rates. Hence, any study that focuses only on gun suicides would be incomplete. The same can be said for gun-only homicides and gun-only accidental deaths.

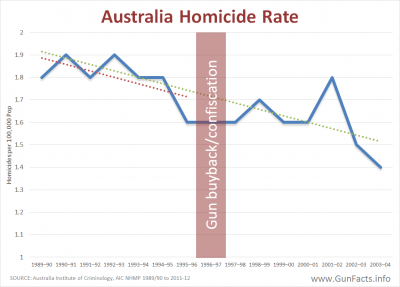

Baseline fallacy: This is where a researcher starts their analysis at a point in time and infers cause and effect without examining what was happening before that point. As an example, some researchers credit the 1996-era changes in gun laws in Australia for lowering homicide rates. But homicide rates were falling before 1996, and at the exact same rate down to two decimal places.
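
One way to check for a baseline fallacy is to fit a trend line to the years before the cutoff as well as the years after it, and compare the two slopes. The following is a minimal sketch of that check. The function names and the data series are hypothetical and invented purely for illustration; they are not Australian figures or figures from any study.

```python
def least_squares_slope(points):
    """Ordinary least-squares slope for a list of (year, rate) pairs."""
    if len(points) < 2:
        raise ValueError("need at least two points to fit a trend")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in points)
    denominator = sum((x - mean_x) ** 2 for x, _ in points)
    return numerator / denominator


def slopes_around(points, cutoff_year):
    """Return (slope before cutoff, slope from cutoff onward)."""
    before = [(x, y) for x, y in points if x < cutoff_year]
    after = [(x, y) for x, y in points if x >= cutoff_year]
    return least_squares_slope(before), least_squares_slope(after)


# Hypothetical series: a rate that falls 0.1 per year, every year,
# regardless of a law assumed (for illustration) to take effect in 2005.
hypothetical_series = [(year, 5.0 - 0.1 * (year - 2000)) for year in range(2000, 2010)]

pre_slope, post_slope = slopes_around(hypothetical_series, cutoff_year=2005)
print(f"slope before cutoff: {pre_slope:.2f} per year")
print(f"slope after cutoff:  {post_slope:.2f} per year")
```

If the two slopes are about the same, as in this made-up series, the decline after the cutoff is a continuation of an existing trend and cannot by itself be credited to whatever changed at the cutoff.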

PEER REVIEW

Peer review is not always reliable. The issues include inappropriate journal selection, paper filtering, and the general decline due to profiteering.

Inappropriate journal selection

In recent years, medical schools and some in the medical field have published poorly constructed criminology investigations in medical journals (we named this “criminology malpractice”).

The problem is that editors and judges working for medical journals lack the training and background to see flaws in these studies – much as the judges on a criminology journal would lack the training for reviewing an epidemiological study of pathogens.

We have yet to encounter a single gun policy paper proffered by a medical school, organization or professional that has been submitted to or accepted by a criminology journal.

Paper filtering

Likewise, it is well understood that journal editors choose what papers go under peer review. We are loath to accuse any editor of rejecting papers that disagree with their political beliefs. But Robert Higgs, a university professor, researcher, writer, and editor, notes, “Any journal editor who desires, for whatever reason, to reject a submission can easily do so by choosing referees he knows full well will knock it down; likewise, he can easily obtain favorable referee reports.”

This may also be why medical professionals operating outside their field of expertise publish in medical journals: the editor’s sympathies may be aligned.

General decline due to profiteering

Most journals are profit centers. We know this because a portion of the Gun Facts budget goes to buying journal articles, which are nearly all pay-to-read. This may contribute to articles being accepted for volume instead of quality, and it may help explain the recent news of fraudulent papers being accepted.

Our point

Journalists need to ignore any “peer-review” label and dig at least one level deeper to understand if the paper being proffered has good data quality and good methodologies. “Peer-reviewed” is no longer a safe criterion to use.

Other Research Issues

There are other issues that should give journalists pause before covering any study.

Pandemic, George Floyd, police pullbacks

2020 saw several unprecedented events that caused crime rates to fluctuate wildly. As such, studies of data series that include 2020 and/or 2021 are highly suspect. Any trend analysis that starts in 2020 is hopelessly flawed.

Some criminology papers explored how the pandemic, the Floyd riots and “defund the police” initiatives facilitated more street crime (two we have reviewed include “Did De-Policing Contribute to the 2020 Homicide Spikes?” and “Explaining the Recent Homicide Spikes in U.S. Cities”).

Suicides and gun ownership proxies

Prior to the aforementioned large-scale gun ownership survey, many people, including a few criminologists, used gun suicide rates as a proxy for gun ownership rates, accepting sundry shortcomings of the approach (e.g., urban vs. rural gun ownership variation).

The problem is that some advocacy groups used the “suicide proxy” for gun ownership rates to explore the effects of gun ownership rates on suicides. This is “circular mathematics” and quite inappropriate.
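
The circularity can be shown in a few lines. In this minimal sketch, an "ownership" figure is derived from gun suicide rates and then correlated against those same rates. All numbers, the linear scaling, and the function name are hypothetical and exist only to illustrate the logic; real proxy formulas vary from study to study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance_x = sum((x - mean_x) ** 2 for x in xs)
    variance_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(variance_x * variance_y)


# Hypothetical gun suicide rates for five made-up regions.
hypothetical_gun_suicide_rates = [4.0, 6.5, 8.0, 10.5, 12.0]

# The "ownership" estimate is computed FROM the gun suicide rates
# (the scaling constants here are arbitrary assumptions).
ownership_proxy = [0.03 * rate + 0.1 for rate in hypothetical_gun_suicide_rates]

r = pearson(ownership_proxy, hypothetical_gun_suicide_rates)
print(f"correlation between proxy and gun suicides: {r:.3f}")
```

The correlation comes out essentially perfect because the proxy is just the suicide data relabeled. Nothing has been learned about actual gun ownership, which never entered the calculation.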

A resource for you

Gun Facts has a page on its website where we list and briefly explain the problems with some research we have encountered over time (see the “Bad Research Roster” under the “Research” menu item). You can use this page to see if any policy group is feeding you previously published bad research, or if you need to cross-check papers cited as being authoritative in new studies tossed your way.