Gun Violence Archive Assessment

The Gun Violence Archive (GVA) is not a gold-standard data source. It isn’t even a reliable source. Yet it is commonly, and tragically, cited by people in the media who don’t know enough about gun violence data and criminology to understand why they should avoid citing GVA.

We’ll attempt to clarify that.

Take-aways about GVA

- History of data quality issues

- Incomplete scope of data

- Invents terms that do not comport with established definitions

- No transparency on operations and methods

What the Heck is GVA?

According to some, GVA is a real-time database of gun violence and an important research tool. To others, it is an insult to serious researchers, a detriment to public education, and a naked agitprop tool.

The truth is in between.

We’ll start by saying we do not read into the GVA’s motives. We won’t classify them as saints, sinners, geniuses or morons. We’ll leave that for Twitter mobs.

But GVA has never been an academically serious source of data. Their history, such as it is, paints a picture of people who were ill-equipped for the mission they took on and who, despite improvements, remain well below the bar for gold-standard data.

Admittedly, no data source is pristine. Take, for example, the FBI’s Supplementary Homicide Reports (SHR), which are considered gold-standard… until you notice that Florida supposedly has no homicides, year after year. This is because Florida does not forward its homicide data to the FBI, instead posting it to its own websites. But the FBI’s data collection procedures are uniform and have been consistent for decades, which is one of the pillars of data quality.

The same cannot be said about GVA.

GVA and data quality

In their earliest years, GVA was basically crowd-sourced news scraping. There appeared to be few, if any, real data quality controls.

We’ll leave it to your Google skills to find the rosters of original complaints, but people who tore through GVA’s data noted:

- Events with multiple entries (i.e., one shooting, counted repeatedly)

- Missing events

- Self-defense shootings included as if they were criminal (no designations)

- No follow-up concerning charges not being filed (e.g., cops determined it was a good shoot), and district attorneys or courts dismissing charges that would reclassify events

Some of these problems may still exist (we won’t do an exhaustive discovery, but enough oddities caught our eyes to make us suspicious, such as one event being classified as both a murder and a self-defense non-shooting).
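The first complaint in the list above (one shooting counted repeatedly) is the kind of problem a basic quality check can surface. What follows is only a minimal sketch of such a check, not GVA's process (which is undocumented). The records and field names (`id`, `date`, `city`, `state`, `killed`, `injured`) are hypothetical, invented for illustration, and do not reflect GVA's actual schema.

```python
from collections import defaultdict

# Hypothetical incident records. Field names and values are illustrative
# assumptions, not real GVA data or GVA's export format.
incidents = [
    {"id": 1, "date": "2020-01-05", "city": "Springfield", "state": "IL", "killed": 1, "injured": 2},
    {"id": 2, "date": "2020-01-05", "city": "springfield ", "state": "IL", "killed": 1, "injured": 2},
    {"id": 3, "date": "2020-01-06", "city": "Dayton", "state": "OH", "killed": 0, "injured": 1},
]


def find_possible_duplicates(records):
    """Return lists of record ids that share date, place, and casualty counts."""
    groups = defaultdict(list)
    for rec in records:
        # Normalize the city name so trivial spelling differences still match.
        key = (
            rec["date"],
            rec["city"].strip().lower(),
            rec["state"],
            rec["killed"],
            rec["injured"],
        )
        groups[key].append(rec["id"])
    # Only groups with more than one record are duplicate candidates.
    return [ids for ids in groups.values() if len(ids) > 1]


possible_duplicates = find_possible_duplicates(incidents)
print(possible_duplicates)  # prints [[1, 2]]
```

A match on these fields flags candidates for human review only. Two genuinely separate shootings could share a date, city, and casualty count, which is exactly why documented review procedures matter.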

Indeed, if you do a directed search into the GVA website, Google cannot find any mention of GVA’s data quality processes or procedures. Even their “general methodology” page (archived here) never uses the word quality.

All that said, they do claim to have abandoned crowd sourcing in favor of “a dedicated, professional staff,” though there is no clarity on what constitutes “professional” in GVA’s lexicon.

Transparency and data quality

It is this last point that is most concerning. To believe in data quality, one must:

- Understand the data sources

- Approve of the scope of data sources

- Understand the processes by which data is gathered, categorized, encoded, etc.

If you have an afternoon to waste, dig through the FBI’s Uniform Crime Reporting (UCR) system and its newer National Incident-Based Reporting System (NIBRS). The processes are documented to a mind-numbing degree and, at least for the aged-out UCR system, were consistently uniform over a very long time.

This is one of our two principal reasons for rejecting GVA as a data source. Transparency leads to critique, which leads (hopefully) to improved quality. GVA appears disinclined toward both.

Scope of sourcing

GVA claims that they are “utilizing up to 7,500 active sources,” but they do not disclose a list of those sources (not even Google can find the list of GVA sources using directed searches into the GVA website, though Bard does suggest it might include social media and “tipsters”). They note that it is a mix of media and law enforcement, but GVA doesn’t even disclose the rough ratio between these.

This is important because even that 7,500 number is low.

The FBI’s Originating Agency Identifier (ORI) database shows there are nearly 36,500 law enforcement agencies in the United States. Even if all of GVA’s 7,500 sources were law enforcement, that would represent only about 20% of all agencies. And since GVA started life as a news website-scraping operation, we doubt that even a majority of GVA’s sources are law enforcement.
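The coverage arithmetic can be checked in a few lines. This is a rough sketch using only the two figures quoted above. The `law_enforcement_share` parameter is a hypothetical knob added for illustration, since GVA does not disclose the real ratio, and the 50% value below is an arbitrary example, not an estimate.

```python
# Figures quoted in the text above.
GVA_CLAIMED_SOURCES = 7_500        # GVA's "up to 7,500 active sources"
LAW_ENFORCEMENT_AGENCIES = 36_500  # "nearly 36,500" per the FBI ORI database


def agency_coverage(sources, agencies, law_enforcement_share=1.0):
    """Upper bound on the share of agencies covered.

    Assumes every law enforcement source is a distinct agency.
    law_enforcement_share is hypothetical: GVA does not publish it.
    """
    return sources * law_enforcement_share / agencies


# Best case: every one of the claimed sources is a law enforcement agency.
best_case = agency_coverage(GVA_CLAIMED_SOURCES, LAW_ENFORCEMENT_AGENCIES)
print(f"Best case: {best_case:.1%}")  # prints "Best case: 20.5%"

# Purely illustrative: if only half of the sources were law enforcement.
half_case = agency_coverage(
    GVA_CLAIMED_SOURCES, LAW_ENFORCEMENT_AGENCIES, law_enforcement_share=0.5
)
print(f"Half law enforcement: {half_case:.1%}")  # prints "Half law enforcement: 10.3%"
```

Even the best case leaves roughly four out of five agencies uncovered, and any realistic share of media sources pushes the number lower.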

All this circles back to GVA’s general lack of transparency. In short, with limited data sources, undisclosed sources, undocumented methodologies, and a poor early history of data quality, GVA lacks any trust-based standing in the field of gun violence research.

GVA’s inappropriate definitions

This is where GVA draws the wrath of people familiar with propaganda tactics.

It is well understood by propaganda practitioners that if the data doesn’t favor you, change the definition of the measurement. Amtrak famously improved their lousy on-time record not by improving operations, but by changing the definition of “on-time.”

We won’t accuse GVA of malevolent intention. But they have done the public a grave disservice, muddling public understanding of gun violence by inventing terms that sound similar to established criminology terms and by revising the underlying definitions.

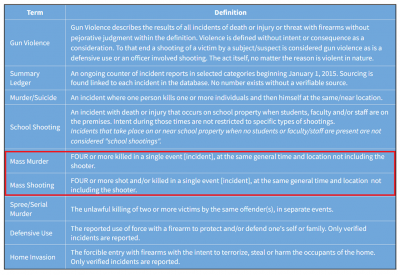

This table, from the GVA website, gives but one example.

There is an established definition for “mass PUBLIC shooting” (4+ killed not including the perpetrator, one public location, not associated with another crime). GVA invented their own version of the term “mass shooting,” omitting the word “public.” Journalists who were not educated on the nuances then proceeded to misreport the nature of mass PUBLIC shootings.

Even GVA’s definition of “mass shooting” injects irregularities, such as the murders being in the same “general area” (a hopelessly vague definition) and at the same “general time” (equally vague). This approach has led to cases where someone may have killed their spouse at home in the morning, a patron at a bar that afternoon, then two people in the neighborhood that night. That is not a mass public shooting, which makes this a horrifically invalid data definition.

But the BIG gotcha is not time and place, it is people killed or injured. Note that for “mass shooting” GVA includes people who were “shot and/or killed.” Hence, their 4+ definition might include four people wounded, none killed. The problem GVA created, intentionally or not, is that reporters and activists are now conflating “mass PUBLIC shootings” (4+ killed) with GVA’s “mass shooting” (4+ wounded … or killed).
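To make the conflation concrete, here is a small sketch that encodes both definitions as described in this article and applies them to the same event. It is a simplification under stated assumptions: the `Incident` fields are hypothetical names, the GVA-style check covers only the “shot and/or killed” count and ignores the vague “general area” and “general time” clauses, and the sample incident is invented.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """Hypothetical incident record. Field names are illustrative only."""
    killed: int                # victims killed, excluding the perpetrator
    wounded: int               # victims shot but not killed
    public_location: bool      # occurred in a public place
    single_location: bool      # occurred at one location
    tied_to_other_crime: bool  # associated with another crime


def is_mass_public_shooting(incident):
    """Established definition as summarized in the article:
    4+ killed, one public location, not associated with another crime."""
    return (
        incident.killed >= 4
        and incident.public_location
        and incident.single_location
        and not incident.tied_to_other_crime
    )


def is_gva_style_mass_shooting(incident):
    """Simplified GVA-style test: 4+ people shot and/or killed.
    The time and place clauses are deliberately left out of this sketch."""
    return incident.killed + incident.wounded >= 4


# Invented example: four people wounded, nobody killed.
four_wounded = Incident(
    killed=0,
    wounded=4,
    public_location=True,
    single_location=True,
    tied_to_other_crime=False,
)

print(is_mass_public_shooting(four_wounded))     # prints False
print(is_gva_style_mass_shooting(four_wounded))  # prints True
```

The same event lands in one category under one definition and outside it under the other, so counts built on the two definitions cannot be compared or substituted for each other.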

GVA = Gun Variables Altered

The quick point is that between:

- Poor data quality (at least in the beginning)

- Limited data sources

- Lack of transparency

- Unwillingness to use standard definitions (e.g., mass public shootings)

… nobody should cite GVA. They fall far below gold-standard quality and their lack of definitional fidelity makes their motives suspect.

Excellent breakdown of the weaknesses in the GVA, thank you.

Not surprisingly, I’m confident that the media will continue to parrot their numbers (I can’t even call it “data”). It is much like people who have “researched” Covid on Facebook.